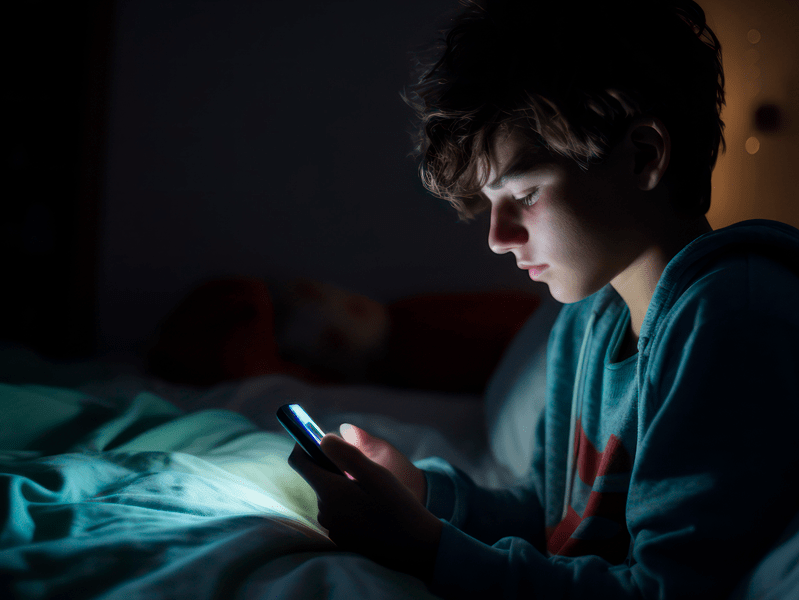

We’ve all had that wow moment when something we thought existed only in the imagination of sci-fi writers is suddenly at our fingertips! Just when we think we have seen everything technology has to offer, something new comes along.

These moments can stop us in our tracks and make us wonder about what could be next. At INEQE Safeguarding Group, we have seen a lot of new apps and platforms utilising deepfakes. The big question is: should you be worried?

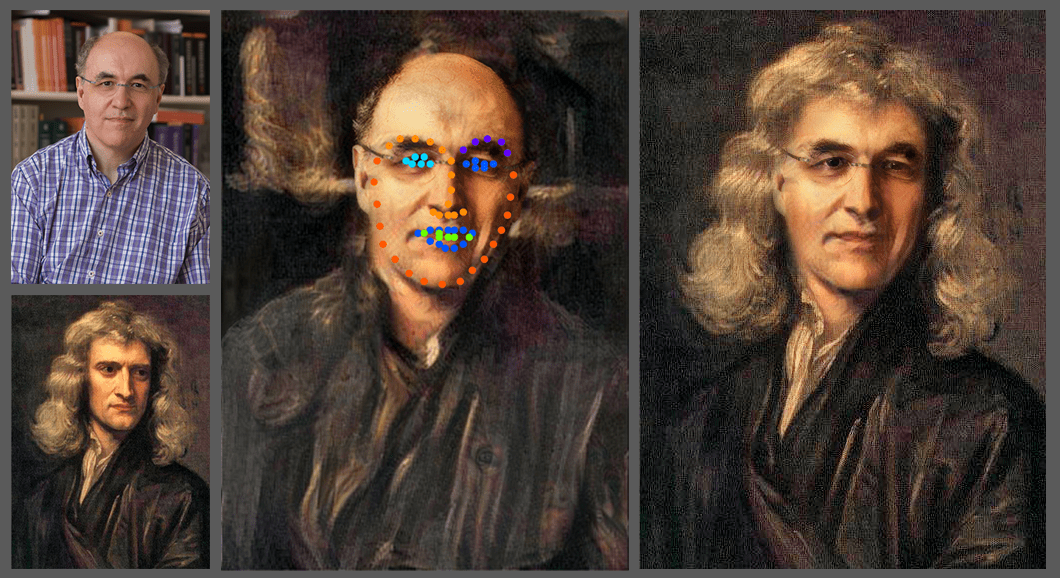

You may have seen (and laughed at) some funny videos that use deepfake technology, for example, videos in which the faces of celebrities, friends, or relatives have been manipulated so that they appear to sing, dance, or even tell jokes. When deepfakes are used in this way, they are unlikely to cause serious harm.

However, there is a much more worrying and disturbing side to how deepfakes are being used. Read our guide to deepfakes to find out how you can protect the children in your care as this technology continues to evolve.

©commons.wikimedia.org/wiki/File:Sw-face-swap

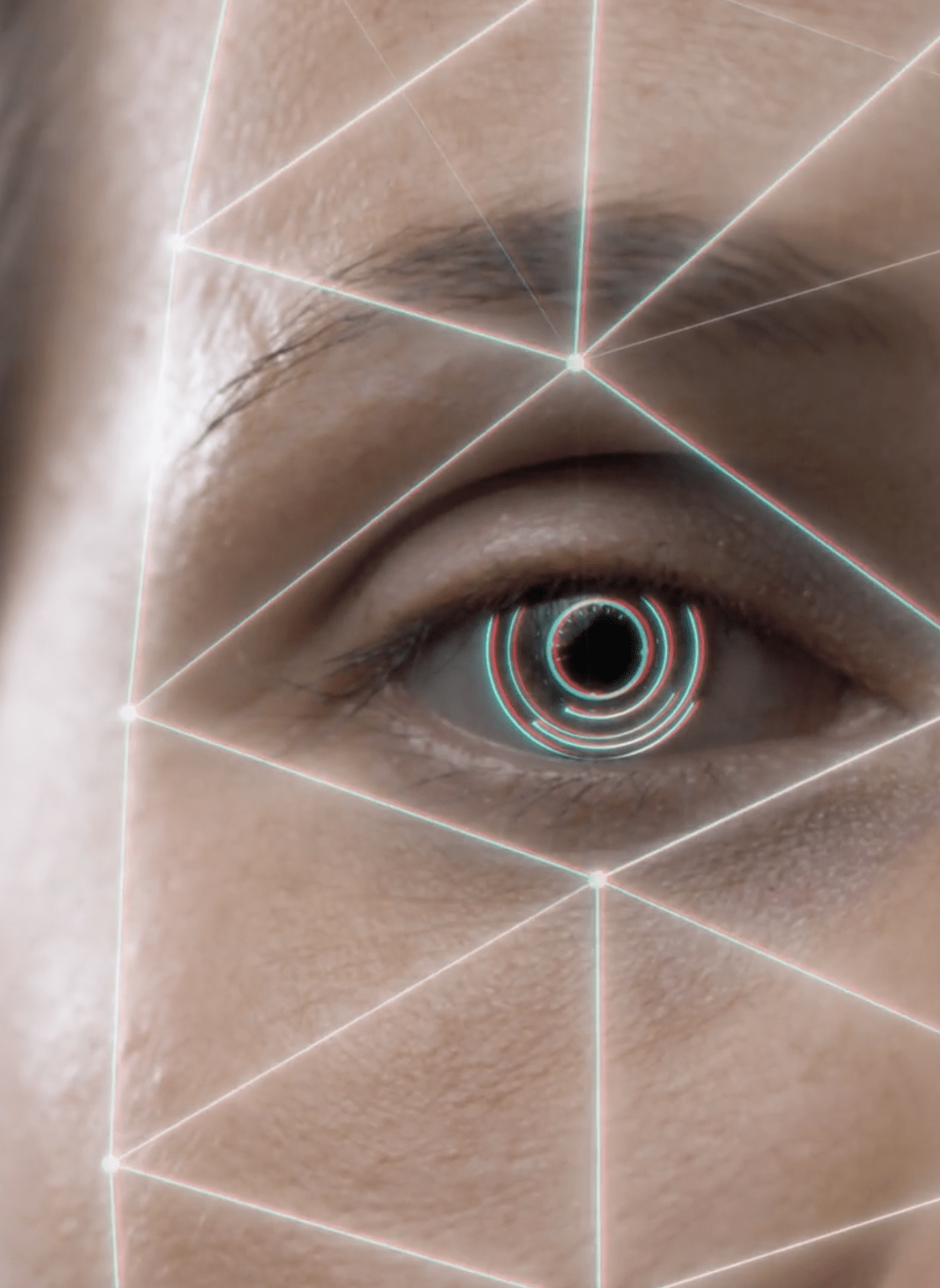

The Harmful Side of Deepfakes

Deepfakes are already being misused in several ways to cause distress and harm. As this technology evolves and becomes more accessible, it is important that this guide sets out some of the ways it can be misused.

Bullying

Deepfakes have been used in cases of cyberbullying to deliberately mock, taunt, or publicly embarrass victims. The novelty of these images may distract from the real issue: they can be used to bully or harass children and young people.

Extortion and Exploitation

Deepfakes can be used to create incriminating, embarrassing, or suggestive material. Some deepfakes are so convincing that it is difficult to tell them apart from the real thing. Having to convince other people that an embarrassing or abusive image is fake can add further layers of vulnerability and distress. These images can then be used to extort money or additional ‘real’ images from the victim.

Deepfakes can also be used to create so-called ‘revenge porn’. This is a form of image-based sexual abuse carried out as retaliation or vengeance, typically after a relationship has ended or after the victim has refused a sexual relationship with the perpetrator.

In the past, deepfakes were often used to create pornographic material featuring famous women. As the technology becomes more accessible, people who are not famous are increasingly becoming victims too.

Deepfakes can also be used as a form of homophobic abuse, in which a person is depicted in gay pornography. This could then be used to ‘out’ the person or in an attempt to ‘destroy their reputation’. For young people who are struggling with their sexual orientation, being depicted in any sexualised deepfake may be particularly distressing.

Image-Based Sexual Abuse

There have been cases where images of children have been harvested and used to generate sexualised deepfakes. A realistic depiction of a child engaging in a sex act can damage their wellbeing and mental health. Deepfake software can also be used to digitally remove clothing from images of victims, and some commercial services allow users to pay to have images professionally manipulated.

It is important that parents, carers, and safeguarding professionals are aware of the risks of this form of (non-contact) sexual abuse. In some cases, victims themselves may be unaware that their images have been harvested and misused to create deepfakes.

While many children and young people may be aware of and understand how images can be manipulated in this way, others may not. It is important to speak to them about the issue of deepfakes and how they can be misused.

Further Resources

Join our Online Safeguarding Hub Newsletter Network

Members of our network receive weekly updates on the trends, risks and threats to children and young people online.