February 22, 2023

Due to the popularity of the online gaming platform Roblox with children and young people under the age of 16, our online safety experts have released this Safeguarding Update about a collection of games trending on the platform. The games depict themes of isolation, cutting, and suicide, and some include chatrooms where users engage in unmoderated discussions about hopelessness, depression, self-harm, and suicide.

With 75% of children not receiving the mental health assistance they need, it is worrying that vulnerable children may be turning to these kinds of online communities for support and advice.

Some of these 'depression room' games on Roblox have recorded up to 5.6 million visits and are advertised as suitable for 'all ages'. Although these games explicitly breach the platform's Terms of Service, Roblox have not yet removed most of them.

We have summarised the main risks and concerns associated with children accessing these games, as well as practical steps you can take to mitigate harm to the children in your care.

If you are worried about a child’s mental health or suspect that a child is in danger, it is essential to act immediately. Do not hesitate to call the police or contact relevant medical professionals.

What are ‘depression rooms’?

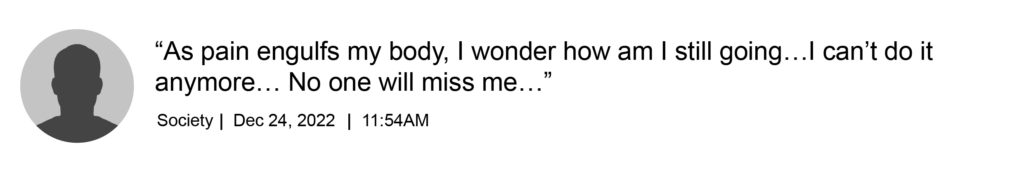

According to a report by Metro, the term 'depression room' describes a game on Roblox that uses sad, melancholic themes to portray depression or suicide. Inside these 'rooms', players can act out depressive behaviours such as crying beside gravestones or sitting alone in dimly lit basements with their heads bowed. Some games even allow players to simulate suicide by jumping off a bridge, or to simulate self-harm by cutting with knives laid out on a table, leaving the avatar bloodied and broken.

Other rooms encourage users to join a group chat called 'sociopath', where young people can reach out to other members for support in dealing with their feelings. However, these conversations often descend into a stream of negative comments about suicide, self-harm, and depression that can drown out any positive support offered.

*Direct quotes from Metro

We contacted Roblox to request the urgent removal of these games, which violate their Terms of Service, and asked why certain games (such as 'Cutting Simulator') had passed their filtering system. Although we provided images, names, and direct links to the games on multiple occasions, Roblox have removed only one of the games we reported. We are continuing to report the games through the standard reporting mechanisms and are monitoring the situation.

How does my child know about this?

Seeking Support

Given the pressures on mental health services such as CAMHS, we know that accessing timely and adequate support from health professionals has become a challenge. Online communities such as 'depression rooms' and their associated chatrooms can offer struggling children a space to feel heard and supported without fear of what might happen if they told an adult in their life. Sadly, these spaces are often unmoderated, and the advice shared in them can quickly be overwhelmed by negative comments.

If you are worried about a child or young person in your care, please seek help from emergency services.

Algorithms

Vulnerable young people may seek out online material related to suicide, depression, and self-harm as a way to explore their feelings anonymously. However, social media and gaming platform algorithms then tend to promote similar content to the user. This was seen in the tragic case of Molly Russell, who, after viewing harmful content on Pinterest, was sent emails by the platform highlighting more posts on the topic of 'depression'.

Streamers

Even if the children and young people in your care have not played Roblox or sought out this content on the platform themselves, there is another avenue that is becoming almost as popular as the platform itself: streamers. Hundreds of streamers record themselves playing Roblox games and share these videos on platforms such as Twitch, Discord, and YouTube. They rate and review games, share tips and tricks, and promote popular players and creators. If streamers feature 'depression room' games, children may be exposed to this content without ever searching for it.

Poor Filtering

Most social media and gaming platforms filter inappropriate or offensive language, but these games and their content often rely on variations of words such as 'depression', 'sad', or 'cutting' that may not be blocked. For example, instead of the word 'suicide', some games use phrases such as 'unalive' or 'end my life'. Filtering systems also do not always catch games that depict harmful activities, such as 'Cutting Simulator'. When users search for a term like 'cutting', these games may appear at the top of the results, so children could innocently stumble upon this harmful content.

What are the risks?
