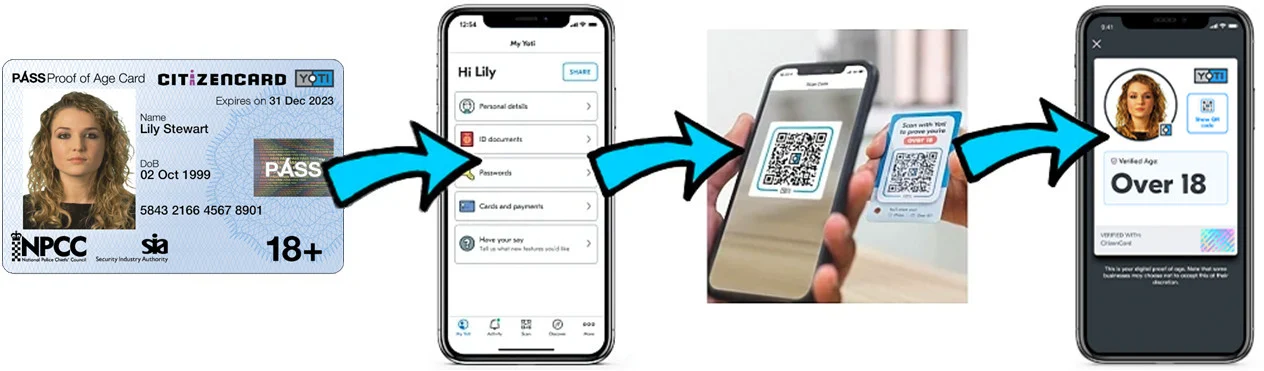

Header Image ©yoti.com

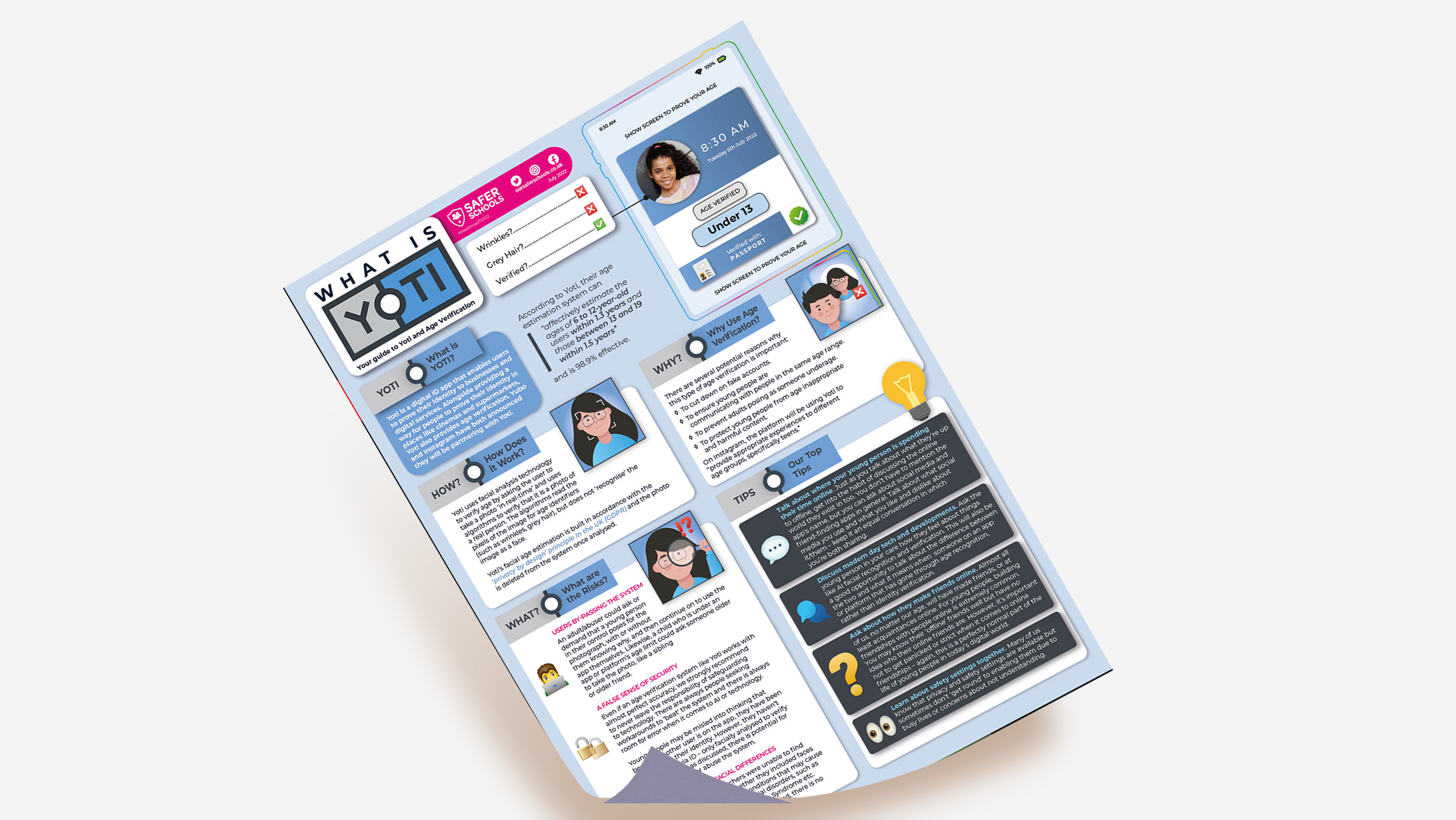

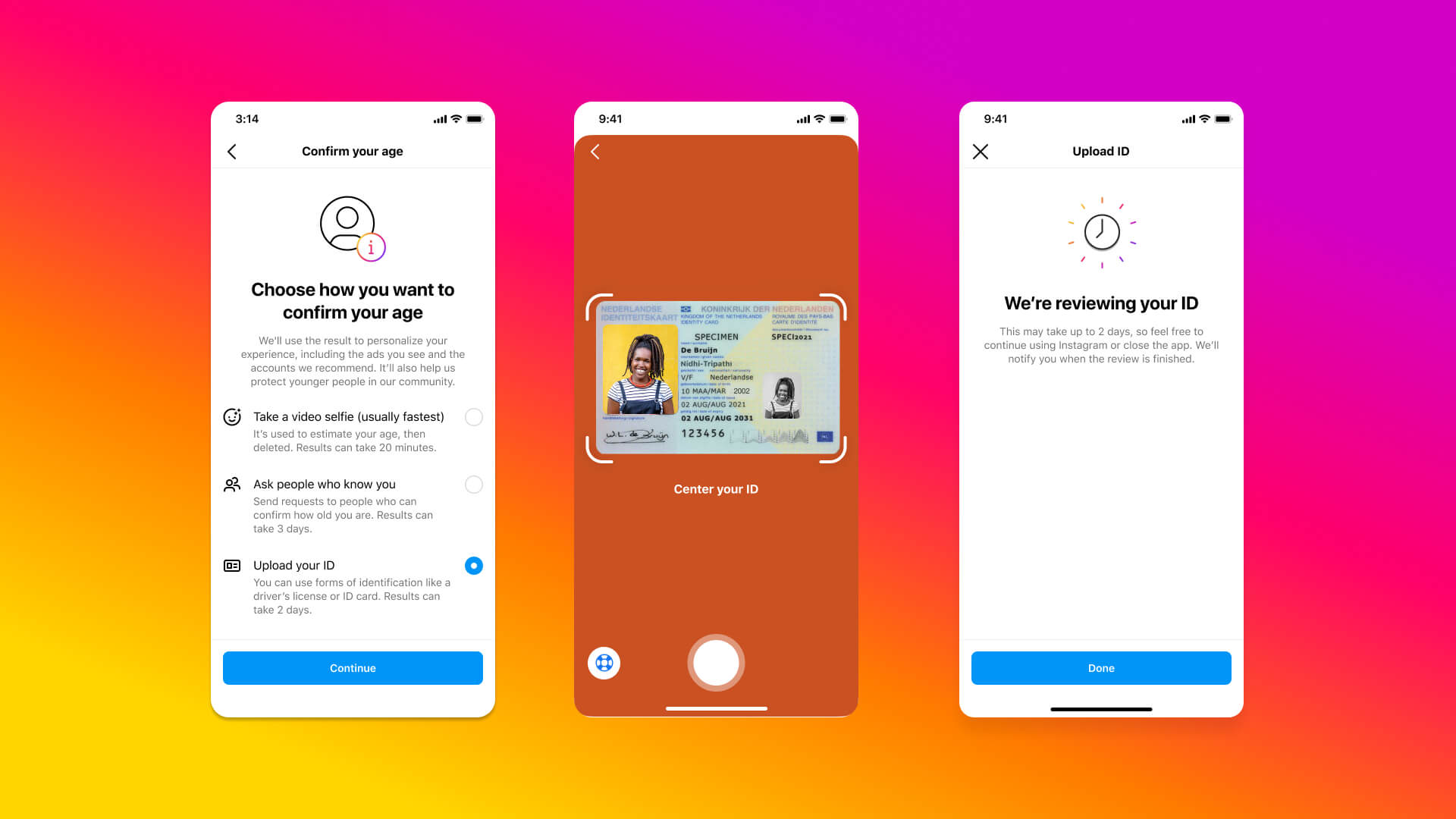

With the recent announcements that both Yubo and Instagram will be partnering with Yoti as part of their new strategies to verify users’ ages, our online safety experts took a closer look at what Yoti is, and whether photo age verification can help young people stay safer online.

What is the Yoti App?

Yoti is a digital ID app that enables users to prove their identity to businesses and digital services. It’s been around since 2014 but has been hitting the headlines recently due to its partnerships with the social media platforms Instagram and Yubo.

Yoti works both as a standalone app, which anyone can download and create an account on, and as software integrated into other apps (like Instagram).

Although Yoti provides several other services, such as the Yoti CitizenCard, we’re going to focus on its photo age verification service.

How does Yoti work – and is it safe?

Apps, platforms and websites that require age verification use a range of different methods. Some simply ask users to enter their date of birth or tick a checkbox confirming they are over or under a certain age. These methods are easily bypassed by anyone who wishes to do so, and are rarely effective.

Yoti uses facial analysis technology to verify age: it asks the user to take a photo ‘in real time’ and uses algorithms to check that the photo is of a real, live person. The algorithms read the pixels of the image for age indicators (such as wrinkles or grey hair), but do not ‘recognise’ the image as a face.

Some users may have privacy concerns about Yoti, especially if they use the app as their central method of ID, and may wonder whether Yoti is safe to use. Yoti’s facial age estimation is built in accordance with the ‘privacy by design’ principle of UK data protection law (the UK GDPR), and the photo is deleted from the system once it has been analysed.

What’s the Difference Between Facial Analysis and Facial Recognition?

Although the terms are often used interchangeably, facial analysis and facial recognition are two different types of software with different purposes, and Yoti’s technology falls into the first category. Yoti has previously emphasised that its technology is facial analysis software, not facial recognition.

Essentially, this means that its software looks for a face within an image and then analyses it for the information it needs (in this case, the age of the subject), rather than trying to identify the person in the photo and collect information about them.

Currently, Yoti appears to be the leading company in age verification software. However, with the introduction of the Online Safety Bill and the heightened scrutiny it brings, more companies may start developing competing age verification software.

There are several potential reasons why this type of age verification is important:

Instagram will use Yoti to “provide appropriate experiences to different age groups, specifically teens.”

What are Yoti’s Risks?

Users Bypassing the System

An adult or abuser could ask or pressure a young person under their control to pose for the photograph, with or without the young person knowing why, and then go on to use the app themselves.

Likewise, a child who is under an app or platform’s age limit could ask someone older, such as a sibling or older friend, to pose for the photo instead.

A False Sense of Security

Even if an age verification system like Yoti works with near-perfect accuracy, we strongly recommend never leaving the responsibility for safeguarding to technology alone.

There are always people seeking workarounds to ‘beat’ the system and there is always room for error when it comes to AI or technology.

Young people may be misled into thinking that, because another user is on the app, that user’s identity has been verified. However, users have not had their identity verified with ID; they have only had their face analysed to verify their age. As discussed above, there is also potential for users to bypass or abuse the system.

Racial Biases and Facial Differences

Our online safety researchers were unable to find any information from Yoti at this time on whether it included the faces of people with disabilities or conditions that may cause facial differences or craniofacial disorders, such as Treacher Collins syndrome or Down syndrome.

Although racial bias is reportedly limited, there is no further information on this, for example on the adultification of Black children (the tendency to perceive Black children as older than they are).
